
The Problem

We are in the exponential growth phase of Large Language Models (LLMs). New releases of OpenAI’s GPT, Mistral, and Gemini arrive every few weeks, and they keep getting smarter. Under the hood, there is a tug-of-war between increased intelligence and the speed at which an application responds to the user. Currently, speed is losing that war, and the experience inside many of the highly intelligent applications being released is far from enjoyable. The contrast with plain LLM interfaces like ChatGPT makes it worse: they respond extremely fast because they are past the cost-of-scale hurdle and run far simpler pipelines. This article dives into two areas we focus on at PressW: optimizing time to first token and showing intermediate LLM steps. Both are user experience techniques that help your application feel closer to the current gold standard of ChatGPT.

User Experience Techniques

Now that LLM applications have become mainstream, there are a few techniques that have come into play to create an engaging and pleasant user experience.

Time to First Token

Traditionally, applications measure the latency, or response time, of the requests the interface makes to the backend. Backend developers continually work to improve this metric so that the application feels fast to the user. A few examples where this metric is used are:

Time to complete a user login

Fetching data from a database

Processing a user’s uploaded avatar image

LLM applications have a different metric that is extremely important to the user’s experience: time to first token. It measures how long the system takes to send the very first LLM token back to the user. Placed side by side, total response time and time to first token can be drastically different.

As you can see in the table above, the time to first token is ~2 seconds. This is how long the user waits for any feedback from the system. In more complex LLM systems, this number grows as you add agents, tools, and RAG systems (check out this article on RAG). Once you have optimized time to first token as far as your system allows, it’s time to employ other UX tricks to provide a great user experience.
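A minimal way to see both numbers for your own pipeline is to timestamp a token stream as it is consumed. The sketch below assumes an async token iterator; `fake_llm_stream` is a hypothetical stand-in for a real streaming client, with made-up delays, so swap in your provider’s streaming call.

```python
import asyncio
import time
from typing import AsyncIterator, Optional


async def fake_llm_stream(
    tokens: list[str], first_delay: float, per_token: float
) -> AsyncIterator[str]:
    """Hypothetical stand-in for a streaming LLM client."""
    await asyncio.sleep(first_delay)  # model "thinking" before the first token
    for i, token in enumerate(tokens):
        if i > 0:
            await asyncio.sleep(per_token)  # generation time for each later token
        yield token


async def measure(stream: AsyncIterator[str]) -> tuple[Optional[float], float, str]:
    """Consume a token stream; return (time_to_first_token, total_time, text) in seconds."""
    start = time.perf_counter()
    first_token_at: Optional[float] = None
    parts: list[str] = []
    async for token in stream:
        if first_token_at is None:
            first_token_at = time.perf_counter() - start  # first feedback the user sees
        parts.append(token)
    total = time.perf_counter() - start  # time until the full answer is available
    return first_token_at, total, "".join(parts)
```

Running something like `asyncio.run(measure(fake_llm_stream(["Hello", ",", " world"], 0.2, 0.1)))` shows the pattern: time to first token stays near the initial delay, while total time keeps growing with the length of the answer.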

Ways to optimize this metric

Response Streaming

Implementing response streaming is the very first step in optimizing time to first token. Streaming lets the system return partial responses, which immediately reduces the metric because we no longer wait for the LLM to finish its entire answer before sending data back to the user’s application window. This is the main reason ChatGPT feels so fast even when it returns an extremely long-winded answer.
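On the web, one common way to push partial responses to the browser is Server-Sent Events: a `text/event-stream` response in which each message is a `data:` line followed by a blank line. The sketch below only handles that framing and assumes tokens arrive from whatever streaming LLM client you use; the JSON payload shape and the `[DONE]` end marker are choices made for this example, not part of the standard.

```python
import json
from typing import Iterable, Iterator


def to_sse(tokens: Iterable[str]) -> Iterator[str]:
    """Wrap each token in a Server-Sent Events frame as soon as it arrives."""
    for token in tokens:
        # JSON-encode the token so newlines inside it cannot break the SSE framing.
        yield f"data: {json.dumps({'token': token})}\n\n"
    # End-of-stream marker chosen for this sketch; the frontend closes the stream on it.
    yield "data: [DONE]\n\n"
```

Because `to_sse` is a generator, each frame can be written to the response as soon as its token exists. Most Python web frameworks accept such a generator as a streaming body, for example Starlette’s and FastAPI’s `StreamingResponse` with `media_type="text/event-stream"`.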

Backend Parallelization

Modern LLM tooling lets backend developers make certain requests in parallel. Many complex LLM applications rely on multiple agents to generate intermediate outputs and interpretations of a problem. When those steps do not depend on each other, you can run them in parallel and reduce the latency before the final LLM call starts streaming responses back to the users of the application.

Non-Parallel Backend

Parallel Backend

As the example above shows, parallelizing the system condenses the sequential steps of the first backend into a much shorter step. This is definitely a more advanced technique: developers need to spend time understanding the data dependencies and where it makes sense to run operations in parallel. Used effectively, though, it is a powerful one.

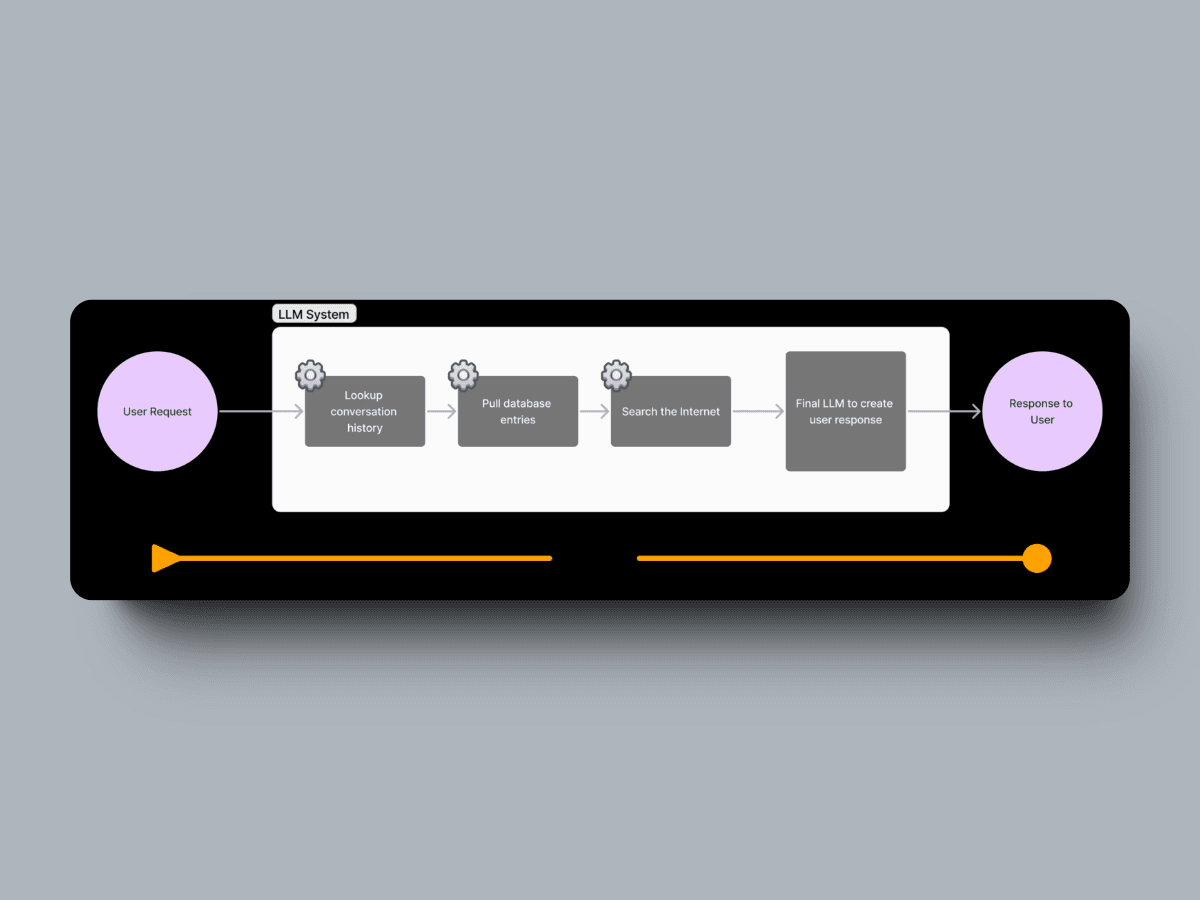
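The difference between the non-parallel and parallel backends can be sketched with Python’s `asyncio`. The agent names and sleep durations below are hypothetical stand-ins for LLM or tool calls; the point is that independent calls awaited one after another cost the sum of their latencies, while `asyncio.gather` costs roughly the slowest one.

```python
import asyncio
import time


async def run_agent(name: str, seconds: float) -> str:
    """Hypothetical agent call; the sleep stands in for an LLM or tool request."""
    await asyncio.sleep(seconds)
    return f"{name} result"


async def sequential() -> list[str]:
    # Each call waits for the previous one: latency is the sum of all three.
    return [
        await run_agent("classify_intent", 0.10),
        await run_agent("search_docs", 0.20),
        await run_agent("load_history", 0.15),
    ]


async def parallel() -> list[str]:
    # Independent calls run concurrently: latency is roughly the slowest one.
    results = await asyncio.gather(
        run_agent("classify_intent", 0.10),
        run_agent("search_docs", 0.20),
        run_agent("load_history", 0.15),
    )
    return list(results)  # gather keeps the order of its arguments


async def answer(use_parallel: bool) -> tuple[list[str], float]:
    """Collect context for the final LLM call and report how long that took."""
    start = time.perf_counter()
    context = await (parallel() if use_parallel else sequential())
    # The final, streaming LLM call would start here using `context`.
    return context, time.perf_counter() - start
```

Comparing `asyncio.run(answer(False))` with `asyncio.run(answer(True))` should show the parallel version finishing in roughly the time of the slowest agent. This only works because the three calls do not need each other’s outputs; a call that consumes another call’s result still has to wait for it.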

Showing Intermediate Steps

The above diagrams show extremely simplified versions of LLM applications. In production applications, there are going to be even more steps that the system has to go through in order to even begin returning tokens to users.

Example production pipeline

These intermediate steps are unavoidable, and without feedback they leave the user not knowing what is going on. The Nielsen Norman Group publishes a set of usability heuristics, which you can read about here, and PressW abides by them. I’m going to highlight the key one for this pain point: visibility of system status.

NN/g’s guidelines for this heuristic are:

The design should always keep users informed about what is going on, through appropriate feedback within a reasonable amount of time.

When users know the current system status, they learn the outcome of their prior interactions and determine next steps. Predictable interactions create trust in the product as well as the brand.

LLM systems can, by and large, be transparent and provide insight into their current internal state. Streaming (as described above) is not limited to final responses! Using modern tooling, the frontend application can also receive the status of the system as a whole. At PressW, we use this intermediate data to provide what we call the “pizza tracker experience”.
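One way to stream status alongside the answer is to send typed events over the same channel and let the client branch on the type. The event shape (`type`, `step`, `text`) and the stage names below are assumptions for this sketch, and the sleeps stand in for real LLM and retrieval calls.

```python
import asyncio
from typing import AsyncIterator


async def pipeline() -> AsyncIterator[dict]:
    """Hypothetical pipeline that reports each stage before streaming its answer."""
    yield {"type": "status", "step": "Understanding your question"}
    await asyncio.sleep(0.05)  # stand-in for a query-rewriting LLM call
    yield {"type": "status", "step": "Searching sources"}
    await asyncio.sleep(0.05)  # stand-in for a retrieval call
    yield {"type": "status", "step": "Writing answer"}
    for token in ["Here ", "is ", "your ", "menu."]:
        await asyncio.sleep(0.01)  # stand-in for the final, streaming LLM call
        yield {"type": "token", "text": token}


async def consume() -> tuple[list[str], str]:
    """Client-side sketch: status events feed the tracker, token events feed the answer."""
    steps: list[str] = []
    answer: list[str] = []
    async for event in pipeline():
        if event["type"] == "status":
            steps.append(event["step"])  # e.g. advance the tracker UI
        elif event["type"] == "token":
            answer.append(event["text"])  # e.g. append to the visible answer
    return steps, "".join(answer)
```

In a real application each event would be serialized and sent to the browser as it is produced (for example as one Server-Sent Events frame), so the tracker advances while the backend is still working.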

Real-World Examples

Domino’s Tracker

If you’ve ordered Domino’s online in the past decade, you are probably familiar with the Domino’s Tracker. It is a prime example of an opaque system (the process of preparing a pizza from order to pickup) that has been shattered open to give users a clear view of the system’s current state.

LLM applications can use the same concept to give users feedback even while complex, long-running processes (30+ seconds) run in the background to get users the answers they expect.
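On the frontend, the tracker itself can be as simple as an ordered list of stages plus the most recent status. The stage names and the text rendering below are hypothetical; a real interface would draw the same three states (finished, active, pending) as visual components.

```python
from typing import Optional

# Hypothetical, fixed list of pipeline stages shown to the user in order.
STAGES = [
    "Understanding your question",
    "Searching sources",
    "Reading results",
    "Writing answer",
]


def render_tracker(current: Optional[str], done: bool = False) -> str:
    """Text 'pizza tracker': finished stages get [x], the active one [>], the rest [ ]."""
    if done:
        active = len(STAGES)  # every stage is finished
    elif current in STAGES:
        active = STAGES.index(current)
    else:
        active = -1  # nothing has started yet
    lines = []
    for i, stage in enumerate(STAGES):
        if i < active:
            mark = "[x]"
        elif i == active:
            mark = "[>]"
        else:
            mark = "[ ]"
        lines.append(f"{mark} {stage}")
    return "\n".join(lines)
```

For example, `render_tracker("Reading results")` marks the first two stages `[x]`, the third `[>]`, and the last `[ ]`.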

Perplexity is an LLM-based search engine. It takes in standard queries that a user would type into Google, such as “Who is the quarterback of the Kansas City Chiefs?”, and returns text-based answers with inline source citations. It’s very similar to Bing’s AI search, if you have used that.

What makes Perplexity unique is its Pro Search feature. When enabled, the system becomes interactive and performs much more complex actions on the user’s behalf. If I want to create a whole dinner menu that involves meatballs and red wine, I enable Pro Search and type in my request.

Steps in the experience:

Perplexity takes in a query

Asks follow-up questions

Takes in answers

Creates new search queries

Cites sources

Generates an answer (streamed back to me)

Example Experience

Below, I have asked Perplexity, “Help me create a dinner menu that involves meatballs and red wine”. The first thing the system does is show a loading indicator while it works to understand my question; it then asks me some follow-up questions.

Once I have answered the questions, it shows me, linearly, each action it is taking to fully answer my question. This linear loading addresses the visibility problem by showing what is going on inside the system.

Next, the system begins streaming the response back to the user and displays the sources it used. We have now received our first tokens, and the user is happy to be receiving their answer (and a delicious-sounding recipe).

Perplexity is a great case study in showing the intermediate steps of a workflow. It gives users enough contextual information to follow how their query is being handled while it produces the best answer it can, so the system never feels like a black box that leaves them waiting with no context.

Final Example: Custom GPT Creation

OpenAI recently released a feature on its ChatGPT platform that allows users to create their own specialized versions of GPT. As part of this process, the user defines the personality, tone, and system prompt of the custom GPT through a modified chat window. When the system receives input from the user and needs to make backend changes to the GPT’s configuration, the user sees specialized loading indicators that provide visibility into what OpenAI is doing in the background.

The screenshot above shows a sample loading message displayed while OpenAI uses a backend service to generate the custom GPT’s profile photo. This loading indicator is eventually replaced by a streamed text response confirming success and asking the next follow-up questions. We have an article here on creating your own custom GPT; check it out!

Note on Loading Indicators

Loading indicators like this can be a great way to give users updates about an LLM system, even when streaming is not supported. Good communication between the product and engineering teams can provide pseudo-visibility into the system. One example is a timer-based loading indicator that cycles through different messages to show that multiple things are happening in the background, even when the interface does not know which step is currently running.

Source: https://dribbble.com/shots/11342077-Revolver-Loading-Indicator-Animation
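A timer-based indicator like the one described above needs no cooperation from the backend: the client rotates messages on a fixed interval until the request finishes. The sketch below shows the idea with Python’s `asyncio`; the messages, the interval, and the `show` callback (which would update the UI) are all assumptions for illustration.

```python
import asyncio
import itertools
from typing import Awaitable, Callable, TypeVar

T = TypeVar("T")

# Hypothetical messages; they describe likely work, not the step actually running.
MESSAGES = ["Reading your request...", "Gathering sources...", "Drafting a response..."]


async def with_cycling_indicator(
    work: Awaitable[T],
    show: Callable[[str], None],
    interval: float = 2.0,
) -> T:
    """Await `work`, calling `show` with the next message every `interval` seconds."""
    task = asyncio.ensure_future(work)
    for message in itertools.cycle(MESSAGES):
        show(message)
        # Wait for the work, but stop waiting after `interval` to rotate the message.
        done, _ = await asyncio.wait({task}, timeout=interval)
        if done:
            break
    return task.result()  # re-raises if the work itself failed
```

For example, `await with_cycling_indicator(call_backend(), print)` would print a new message every two seconds until a hypothetical `call_backend()` coroutine returns. Since the messages are not tied to real progress, phrase them as work the system always does rather than claiming a specific step has finished.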

Conclusion

User experience in LLM applications is crucial and can be enhanced by optimizing time to first token and showing the intermediate steps of the process. Techniques such as response streaming and backend parallelization can improve the time-to-first-token metric, while providing visibility into system status can enhance user engagement.

The future of LLM applications is exciting, and by placing a strong emphasis on user experience, developers and application owners can ensure they stay ahead of the curve, delivering applications that are not only technologically advanced but also user-friendly and intuitive. In a world where user satisfaction is paramount, the importance of these techniques cannot be overstated.