Welcome to the fascinating world of AI prompting, where machines are taught to think with the clarity and precision of humans. In this article, I want to share how we used two sophisticated LLM prompting techniques, Chain of Thought and Agentic prompting, to solve a difficult challenge at PressW. While working on a complex AI application, I had to generate high-quality synthetic data in order to build our proof of concept.

Simply going to ChatGPT and asking it to “generate high quality financial data for a SaaS company” obviously wasn’t going to cut it. I needed a system that used LLMs to efficiently generate high-quality data that was as close to real data as possible. Along the way I used some powerful techniques, and I would love to share how they demonstrate just how strong AI’s problem-solving capabilities really are.

Background Knowledge

Chain of Thought Prompting

What exactly is an algorithm? Most explanations boil down to “a sequence of specific steps” (Elaine Kant).

We humans are constantly creating our own algorithms. Algorithms are not constrained to a programming language but are fundamental to how humans approach large problems: we break the task into individual, manageable, detailed chunks, and attack them in order of priority and dependencies.

An excellent example of algorithmic thinking is the step-by-step process of brewing tea. Why? The recipe for making a cup of tea invokes specific and detailed functions to achieve the final goal.

→ Start by bringing 1.5 cups of water per person to a boil.

→ Add tea leaves, spices, sugar, and ¼ cup of milk.

→ Strain out and serve until the desired color is achieved.

This sequence of actions seems rather straightforward to us humans. In reality, each of these steps can be broken into its own complex algorithm. For example, the function to boil 1.5 cups of water can be decomposed into:

→ Fetch a flat-bottomed pan from a cupboard.

→ Measure 1.5 cups of water.

→ Pour the measured water into the pan.

→ Ignite the stove and place the pan on high heat.

→ Wait for the water in the pan to boil.
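The decomposition above can be sketched as a small tree of steps. This is only an illustration: the `Step` class and the `flatten` helper are hypothetical names introduced here, and the step descriptions are shortened from the recipe.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Step:
    """One step of an algorithm; sub_steps holds its finer-grained breakdown."""
    description: str
    sub_steps: list[Step] = field(default_factory=list)


def flatten(step: Step) -> list[str]:
    """Return the lowest-level actions in execution order (depth-first)."""
    if not step.sub_steps:
        return [step.description]
    actions: list[str] = []
    for sub in step.sub_steps:
        actions.extend(flatten(sub))
    return actions


boil_water = Step(
    "Bring 1.5 cups of water per person to a boil",
    [
        Step("Fetch a flat-bottomed pan from a cupboard"),
        Step("Measure 1.5 cups of water"),
        Step("Pour the measured water into the pan"),
        Step("Ignite the stove and place the pan on high heat"),
        Step("Wait for the water in the pan to boil"),
    ],
)

make_tea = Step(
    "Make tea",
    [
        boil_water,
        Step("Add tea leaves, spices, sugar, and milk"),
        Step("Strain and serve"),
    ],
)

# Print every low-level action as a numbered list.
for number, action in enumerate(flatten(make_tea), start=1):
    print(f"{number}. {action}")
```

Only the first top-level step is expanded here, so the flattened list mixes five fine-grained actions with two coarse ones. Expanding the other steps would work the same way.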

By breaking down a task into smaller and smaller sub-tasks and steps, we can very effectively create a recipe (pun intended) to reproduce results. In fact, this process of breaking problems down and ordering their constituents extends into the world of AI, birthing one of the most powerful prompting techniques discovered thus far.

This is the core concept behind Chain of Thought Prompting, a technique we use to guide AI models through complex tasks one step at a time. (Hint: this is very similar to how you would train a person to do a new task!)
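As a minimal sketch of how this idea can be turned into a prompt, the function below lays out a task as an ordered list of steps and asks the model to reason through them in order. The function name and the instruction wording are assumptions made for illustration, not a fixed formula.

```python
def build_chain_of_thought_prompt(task: str, steps: list[str]) -> str:
    """Build a prompt that asks the model to work through the steps in order."""
    numbered = "\n".join(
        f"{number}. {step}" for number, step in enumerate(steps, start=1)
    )
    return (
        f"Task: {task}\n\n"
        "Work through the following steps in order, writing out your "
        "reasoning for each step before moving to the next:\n"
        f"{numbered}\n\n"
        "After the last step, state the final result."
    )


prompt = build_chain_of_thought_prompt(
    task="Explain how to make a cup of tea.",
    steps=[
        "Bring 1.5 cups of water per person to a boil.",
        "Add tea leaves, spices, sugar, and milk.",
        "Strain and serve.",
    ],
)
print(prompt)
```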

Agentic Prompting

Here's another fundamental concept in the world of AI: Agents.

To help you understand what an agent is, let's break it down using another real world example. If you are a line cook at a local restaurant, we can assume that you possess knowledge of many algorithms in the same domain, such as making breakfast items. If we order some tea, you automatically know its recipe and start executing its algorithm.

→ Start by bringing 1.5 cups of water per person to a boil.

→ fetch → measure → pour → ignite → wait

→ Add tea leaves, spices, sugar, and ¼ cup of milk.

→ … (sub algorithm)

→ Strain out and serve until the desired color is achieved.

→ … (sub algorithm)

You exist as an autonomous line cook agent here. Due to your profession, we can assume you know multiple complete algorithms AND how to execute their subparts. A customer doesn't need to re-state a dish's entire recipe while placing the order, because you were trained to know the algorithm on your own!

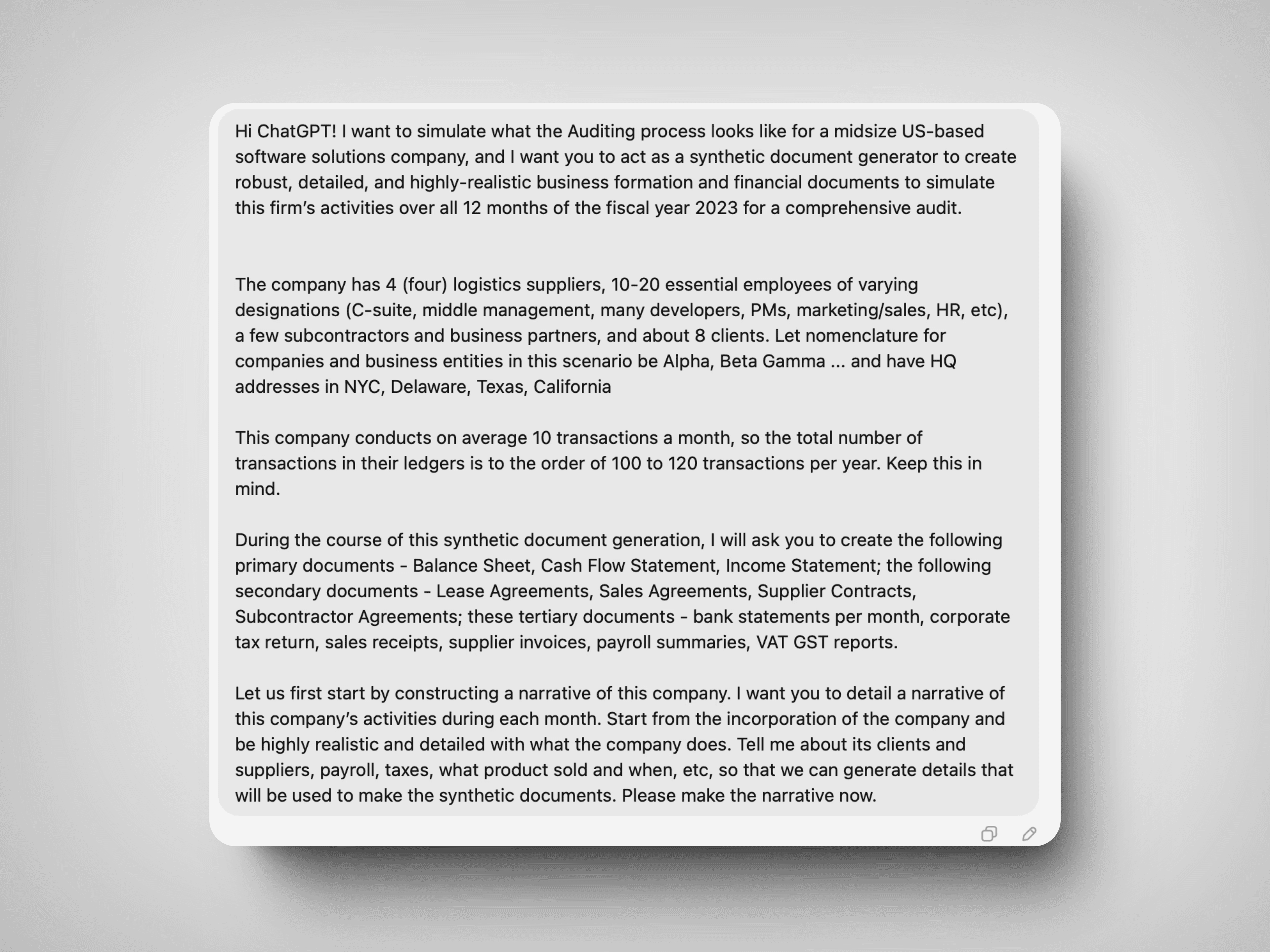

This analogy holds when prompting a Large Language Model (LLM). When you direct the LLM to assume the role of an agent, there is an implicit understanding that the LLM possesses the same knowledge or expertise a human professional would have in that role. You can leverage the agent’s pre-trained skill set to instruct the LLM abstractly, without going into extreme detail during prompting, as in the accompanying image. This technique is called Agentic Prompting.

A basic example of Agentic Prompting on GPT-4o. The agent here is a travel guide that already knows general trip schedules and NYC geography, and can create a 3-day trip based on client guidelines.
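In code, a role-based prompt like this is often expressed as a pair of chat messages: one that sets the role and one that carries the request. The sketch below only builds that structure and calls no API. The `system`/`user` message layout is a convention used by several chat-style LLM APIs and is assumed here, and the role and request wording are hypothetical rather than the exact prompt from the image.

```python
def build_agent_messages(role: str, request: str) -> list[dict[str, str]]:
    """Return chat messages that assign a role first, then state the request."""
    return [
        # The role message sets the implicit knowledge we expect the agent to have.
        {"role": "system", "content": f"You are {role}."},
        # The request can stay abstract because the role carries the expertise.
        {"role": "user", "content": request},
    ]


messages = build_agent_messages(
    role="an experienced New York City travel guide",
    request="Plan a 3-day trip to NYC based on my guidelines: ...",
)
for message in messages:
    print(f"{message['role']}: {message['content']}")
```

Notice that the request does not explain how to build an itinerary, just as a customer does not recite a recipe to the line cook.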

Agentic Prompting for PressW

Why?

LLMs are trained on large corpora of text such as research papers, novels, and websites. OpenAI trained its GPT-3 and GPT-4 series models on a vast amount of internet text, putting the Large in Large Language Models! The big unlock here is that by training on such a vast quantity of real-world data, these models have developed a common-sense understanding of the world and how it works.

As a by-product of all this training, LLMs are extremely good at mimicking real-life situations and generating synthetic data. Synthetic data is fictional but realistic data, and it can be created for almost any context thanks to LLMs’ large training sets.

PressW is currently building an Accounting Reconciler, aka Document Review Assistant, training an LLM to run pre-audit automations for financial audits. Put very simply, this Document Review Assistant looks through pages of financial data and ensures all the numbers add up, i.e. reconcile across the entire document set. It also identifies discrepancies and compliance issues, wrapping its findings into a detailed report with document summaries for auditors.
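To make "reconcile" concrete, here is a toy sketch of the kind of cross-document check involved. It is not the Document Review Assistant's implementation: the function name, the document names, and every figure below are hypothetical, and it assumes the values have already been extracted from the documents.

```python
from decimal import Decimal


def find_discrepancies(
    reported: dict[str, dict[str, Decimal]],
) -> dict[str, dict[str, Decimal]]:
    """Return only the figures whose source documents state different values.

    `reported` maps a figure name to {document name: value in that document}.
    """
    return {
        figure: by_document
        for figure, by_document in reported.items()
        if len(set(by_document.values())) > 1
    }


# Hypothetical extracted values, for illustration only.
reported = {
    "monthly_rent": {
        "lease agreement": Decimal("4000.00"),
        "april bank statement": Decimal("4000.00"),
    },
    "april_revenue": {
        "invoice ledger": Decimal("12500.00"),
        "april bank statement": Decimal("12050.00"),
    },
}

for figure, by_document in find_discrepancies(reported).items():
    print(f"{figure} does not reconcile: {by_document}")
```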

As part of this project (which I am leading), I needed to simulate the financial and business operations of a midsize SaaS company, building a baseline document set to test this pipeline. This gave us a perfect situation to test the current limits of LLM capabilities. A challenging problem for LLMs today is taking in and reasoning through a high volume of overlapping details and data. This is compounded when dealing with tabular data (like Excel sheets or embedded tables), which is ubiquitous in the financial world. Synthetic documents were a logical choice to build the document set, but inputting high-quality data is the key to success here.

I start by laying out the situation to the LLM and providing it with relevant context in paragraph (1). I also instantiate an agent for future use. Then, I provide extremely specific details in paragraphs (2) and (3) for the upcoming narrative and document generation. In paragraph (4), I provide further context on the LLM’s task, and in paragraph (5) I give the immediate task of generating the narrative, along with the necessary parameters.
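The five-paragraph layout described above can be sketched as a small data structure. Everything in this block is an assumption made for illustration: the field names, the split between paragraphs (2) and (3), and all of the placeholder text. It is not the actual prompt used at PressW.

```python
from dataclasses import dataclass


@dataclass
class SetupPrompt:
    context_and_role: str   # paragraph (1): situation, context, and the agent's role
    narrative_details: str  # paragraph (2): specifics for the upcoming narrative
    document_details: str   # paragraph (3): specifics for document generation
    task_context: str       # paragraph (4): further context on the overall task
    immediate_task: str     # paragraph (5): what to generate right now

    def render(self) -> str:
        """Join the five paragraphs, labelling each with its number."""
        paragraphs = [
            self.context_and_role,
            self.narrative_details,
            self.document_details,
            self.task_context,
            self.immediate_task,
        ]
        return "\n\n".join(
            f"({number}) {text}" for number, text in enumerate(paragraphs, start=1)
        )


# Placeholder wording only; replace with your own details.
setup = SetupPrompt(
    context_and_role="You are the financial controller of TechWave, a midsize SaaS company.",
    narrative_details="The narrative covers one financial year of business activities with dates and amounts.",
    document_details="Documents include bank statements and a lease agreement.",
    task_context="These documents will be used to test a pre-audit review pipeline.",
    immediate_task="Write the narrative first, ordered by date, with a monthly cash flow tally.",
)
print(setup.render())
```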

GPT can only think by “writing out its thoughts” (John Furneaux), so configuring vital details explicitly forces the LLM to devote computation to the contents of the document set, pre-setting a large amount of contextually relevant data. It was incredibly important for me to create a backstory for the company first. Had I not done so, the LLM would have set a few cursory details internally and generated documents as a black box operation, preventing me from cross-referencing the reconciliation of the resulting documents.

In practice, this narrative can be referenced continuously during generation, removing the need to type in document-specific details every time and ensuring the transactions reconcile as long as the LLM sticks to the summary. Furthermore, ChatGPT often stalls or errors out mid-document when generating a large document set. When this happens, I can simply copy-paste this narrative into the prompt of a new ChatGPT conversation and continue manufacturing the remaining documents. This retains the partial generation work done by the first conversation.

By contrast, if this narrative were not written out first and the GPT conversation timed out, the internal backstory details would be lost and we would need to regenerate ALL the documents so they align with each other. Thus, setting a narrative first helps you verify GPT’s understanding of your instructions and avoids wasting time and resources if you end up batching the generation.
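The resume-after-failure workflow can be sketched as follows. I did this by hand with copy-paste, so the code is only an illustration of the idea: the function name, the prompt wording, the document list, and the narrative placeholder are all hypothetical.

```python
def prompts_for_remaining(
    narrative: str, documents: list[str], completed: set[str]
) -> dict[str, str]:
    """Build one self-contained prompt per document that still needs generating.

    Each prompt repeats the full narrative, so it can be pasted into a brand
    new conversation without losing any cross-document details.
    """
    prompts: dict[str, str] = {}
    for document in documents:
        if document in completed:
            continue  # already generated in an earlier conversation
        prompts[document] = (
            f"{narrative}\n\n"
            "Using only the details in the narrative above, "
            f"generate the following document: {document}"
        )
    return prompts


narrative = "Narrative for TechWave: ..."  # paste the saved narrative here
documents = ["April 2023 bank statement", "Lease agreement"]
completed = {"April 2023 bank statement"}  # finished before the chat errored out

for document, prompt in prompts_for_remaining(narrative, documents, completed).items():
    print(f"--- {document} ---\n{prompt}\n")
```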

A selected portion of the narrative constructed for TechWave Solutions. You can clearly see the business activities ordered by date, with numerical and monetary figures provided. A running monthly cash flow at the bottom lets me manually tally figures during a pre-generation sanity check.
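The manual tally can also be done in a few lines of code. This is a sketch under stated assumptions: the activities, amounts, and opening balance below are made up for illustration and are not taken from the TechWave narrative, and `monthly_cash_flow` is a hypothetical helper name.

```python
from collections import defaultdict
from decimal import Decimal

# Hypothetical activities: (ISO date, description, signed amount in USD).
activities = [
    ("2023-04-01", "Office rent", Decimal("-4000.00")),
    ("2023-04-15", "Subscription revenue", Decimal("12500.00")),
    ("2023-05-01", "Office rent", Decimal("-4000.00")),
    ("2023-05-20", "Subscription revenue", Decimal("13000.00")),
]


def monthly_cash_flow(
    activities: list[tuple[str, str, Decimal]], opening_balance: Decimal
) -> list[tuple[str, Decimal, Decimal]]:
    """Return (month, net cash flow, closing balance) rows in date order."""
    net_by_month: dict[str, Decimal] = defaultdict(Decimal)
    for date, _description, amount in activities:
        net_by_month[date[:7]] += amount  # "YYYY-MM" prefix identifies the month

    rows = []
    balance = opening_balance
    for month in sorted(net_by_month):
        balance += net_by_month[month]
        rows.append((month, net_by_month[month], balance))
    return rows


for month, net, closing in monthly_cash_flow(activities, Decimal("50000.00")):
    print(f"{month}: net {net}, closing balance {closing}")
```

Comparing these rows against the tally in the narrative is a quick way to catch arithmetic slips before any documents are generated. `Decimal` is used instead of floats so that currency amounts add up exactly.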

This concludes the "setup". We've given GPT a ton of context about our midsize SaaS business, TechWave, and have simulated its financial year via business activities, relevant monetary figures, and dates. We can finally start generation!

Start with a template, being built here for a bank statement.

Intermediate - GPT simply fills in the document with details from the narrative.

Finally, GPT generates the April 2023 bank statement as a PDF.

The first page of a synthetic lease agreement, generated via a similar process:

Page 1 of 5 of the lease agreement. This secondary document contains the rent per month, principal place of business, lease terms, clauses outlining liability, lease purpose, authorized signatories, etc. The terms of the lease are highly templatized and were filled in with minor but important details from the summary narrative.

The Takeaway

Let's review.

There are many situations where synthetic data is the fastest path forward. This is exactly where we found ourselves at PressW one month ago. We needed baseline data to test our system, but lacked the necessary data to run the audit. Sourcing realistic documents from financial partners would have significantly delayed our development timelines, so I leveraged Agentic and Chain of Thought prompting techniques to produce high-quality, relevant, and large-volume synthetic data.

Now I want to be clear. There is a classic time-quality tradeoff here. This process is certainly more time-intensive than trivially prompting the LLM with “generate financial, legal, and supporting documents for a SaaS company” and allowing GPT to detail the files internally. That kind of one-line prompt, with no examples or intermediate steps, is called zero-shot prompting and generally leads to poor results on a task like this. We don't want that: good input leads to good output, which is what this agentic step-by-step process accomplishes.

When you’re generating multiple interdependent documents, finalizing a cohesive summary and passing it into the prompt syncs documents with each other, reduces hallucinations (fake info) introduced in the documents, and ensures reproducibility in case a document is incompletely generated.

Thus, to obtain high-quality results while interacting with an LLM, drive the workflow yourself: specify the role of the AI agent to establish its implicit domain knowledge, and provide a sequence of specific steps as your chain of thought. When generating synthetic documents, also decide on the relevant cross-document details beforehand so the LLM can continuously reference them and avoid losing information.

P.S. Stay tuned for my exciting follow-up where I break down our new Document Review Assistant project, where we truly push the limits of LLM understanding with a special focus on the auditing space!

More Resources

What are Large Language Models? - LLM AI Explained - AWS

References

Kant, Elaine. “Understanding and automating algorithm design.” IEEE Transactions on Software Engineering 11 (1985): 1361-1374.

Furneaux, John. “You Can Break GPT-4 with One Prompt Tweak.” LinkedIn, 22 May 2023, www.linkedin.com/pulse/you-can-break-gpt-one-prompt-tweak-john-furneaux-lotpe. Accessed 5 Aug. 2024.

About the Author

Aniket Gupta is an AI/ML enthusiast and a CS undergraduate at the University of Texas at Austin.

If you’re interested in talking further, feel free to reach out at www.linkedin.com/in/aniketgupta25/